Beyond the eye - decoding the ocular fundus

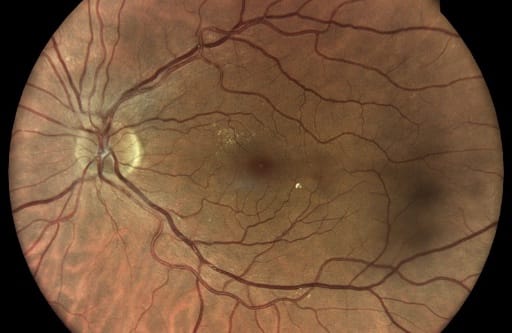

This article reviews the challenge of extracting information about our physical health from fundus images. The fundus is the inner surface of the eye and can be examined by funduscopy (i.e. by taking a camera image of it). Funduscopy is a quick, cheap and non-invasive examination that lets clinicians assess our ocular health and directly observe the microcirculation in our eyes. From these observations, clinicians can draw conclusions beyond the eye about our overall physical health.

In recent years, there has been an increasing effort to train machine learning (ML) models to find biomarkers and detect pathologies in retinal fundus images. This post is intended to provide an overview of these research efforts as of early 2022. Feel free to jump to the key findings at the bottom of the article.

In 2018, a group of researchers at Google were among the first to use deep learning models to extract and quantify cardiovascular risk factors from retinal images (Poplin et al. 2018). They assembled a large dataset of more than 200,000 images from multiple sources (UK Biobank and EyePACS) and trained a rather standard convolutional neural network (CNN) to predict age, sex, smoking status, systolic blood pressure and adverse cardiac events. I find it especially interesting that they were able to predict both age (mean absolute error of 3.26 years) and sex (area under the ROC curve, AUC = 0.97) very well, because ophthalmologists cannot tell a person's sex or age from a fundus image. This paper is therefore a proof point that ML systems can decode information from the retina that humans can't. Other groups worked on the prediction of ischemic stroke and atherosclerosis. Mitani et al. 2020 were able to detect anemia with a good AUC of 0.88, again using the UK Biobank dataset, and validated their model on an external Asian dataset. In their model, the authors combined patient metadata and fundus images.

One standard measure to quantify the risk of cardiovascular disease (CVD) is coronary artery calcium (CAC) scoring using CT images. However, CT scanners emit ionizing radiation, and the scans are much more expensive to acquire than fundus images. Rim et al. 2021 proposed CAC scoring based on retinal fundus images instead. Evaluated on several large datasets with cohorts from several ethnicities, their approach achieved risk stratification for cardiovascular events similar to that of CT-based CAC scoring. Cheung et al. 2021 trained a CNN to measure retinal vessel caliber; its measurements showed good agreement with human raters and may help quantify the narrowing of arterioles, which is associated with CVD risk. Most recently, Mueller et al. 2022 were able to identify peripheral occlusive disease from retinal fundus images.
Interestingly, many patients in their dataset were asymptomatic when the fundus image was taken, so the ML model could detect the disease very early in these cases.
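To make the two headline metrics above concrete, here is a minimal sketch of how they are computed. It covers mean absolute error (MAE) for a continuous target such as age and the area under the ROC curve (AUC) for a binary target such as sex. The AUC uses its Mann-Whitney interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one. The function names and toy numbers are illustrative assumptions. They are not taken from any of the cited papers.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference, e.g. in years when predicting age."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(labels, scores):
    """ROC AUC via the Mann-Whitney statistic: the fraction of
    (positive, negative) pairs in which the positive case scores higher.
    Ties count as half a correctly ranked pair."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up toy data for illustration only.
print(mean_absolute_error([54, 61, 47, 70], [57, 60, 50, 66]))  # → 2.75
print(roc_auc([1, 0, 1, 0, 1], [0.9, 0.2, 0.7, 0.4, 0.35]))     # ≈ 0.833 (5 of 6 pairs ranked correctly)
```

In practice one would use library routines such as scikit-learn's `mean_absolute_error` and `roc_auc_score`. The quadratic pairwise loop here is only meant to show what the number means: an AUC of 0.97 says that the model ranks a randomly drawn positive case above a randomly drawn negative one 97% of the time.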

The only paper I found on neurodegenerative disease phenotyping from fundus images was a very recent one by Tian et al. 2021. Based on UK Biobank data, they analyzed the vascular structure and were able to classify Alzheimer's disease. The activation maps of their models showed that structures adjacent to blood vessels were most salient for classifying Alzheimer's. However, the dataset contained only 122 pathological cases. A problem common to all ML approaches to image phenotyping is that there are hardly any rich datasets that combine fundus images with long follow-up and comprehensive clinical information.
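The caveat about 122 pathological cases can be quantified. The following minimal sketch uses the Hanley & McNeil (1982) approximation for the standard error of an AUC. It shows how the uncertainty of a reported AUC depends on the number of positive cases. The AUC of 0.85 and the 5,000 controls are hypothetical values chosen for illustration. They are not numbers reported by Tian et al.

```python
import math


def auc_standard_error(auc, n_pos, n_neg):
    """Approximate standard error of an ROC AUC (Hanley & McNeil, 1982)."""
    q1 = auc / (2 - auc)
    q2 = 2 * auc ** 2 / (1 + auc)
    variance = (auc * (1 - auc)
                + (n_pos - 1) * (q1 - auc ** 2)
                + (n_neg - 1) * (q2 - auc ** 2)) / (n_pos * n_neg)
    return math.sqrt(variance)


def auc_95ci(auc, n_pos, n_neg):
    """Normal-approximation 95% confidence interval, clipped to [0, 1]."""
    se = auc_standard_error(auc, n_pos, n_neg)
    return max(0.0, auc - 1.96 * se), min(1.0, auc + 1.96 * se)


# Hypothetical: the same AUC and 5,000 controls, with 122 vs. ten times as many cases.
for n_pos in (122, 1220):
    low, high = auc_95ci(0.85, n_pos, 5000)
    print(f"{n_pos} cases: 95% CI {low:.3f} to {high:.3f}")
```

With many controls, the standard error is driven mainly by the number of positive cases. Under these assumptions, the interval for 122 cases is roughly three times wider than for 1,220 cases. This is one concrete reason why small pathological subsets limit the strength of the evidence.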

Besides cardiovascular and neurodegenerative diseases, several works address the prediction of systemic biomarkers. Liu et al. 2019 estimated biological age from fundus images with a smaller error than biological age estimation from brain MRI scans, which are also more expensive to acquire. However, the clinical value of biological age, and how to define a ground truth for training such a model, are not clear to me. Another work by Rim et al. 2020 predicted several systemic biomarkers such as body composition, kidney function and blood pressure. These were much harder to predict than age and sex, i.e. the performance was considerably lower. Interestingly, they also reported their results broken down by cohort ethnicity. It turned out that models trained on fundus images of an Asian population performed worse when applied to a European cohort. Such evaluations are highly interesting and important for ML systems because they make biases transparent and help address them in the future. I wonder whether an ML system could even tell a person's ethnicity from a fundus image. This could help ensure that a model is only used with populations it has been optimized for. Finally, Zhang et al. 2021 identified patients with chronic kidney disease and type 2 diabetes based on retinal fundus images. They used 6-year follow-up data from a cohort and compared several ML models; the multimodal models, trained on both clinical data and fundus images, achieved high AUCs. This is a very interesting paper because it shows the potential of ML systems when used with follow-up data.
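Reporting results broken down by cohort, as Rim et al. 2020 did, amounts to computing the same metric separately for each subgroup. Below is a minimal sketch with made-up toy data. The group names `cohort_A` and `cohort_B` are hypothetical placeholders, and the hand-written AUC would normally be replaced by a library routine such as scikit-learn's `roc_auc_score`.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """ROC AUC as the fraction of correctly ranked (positive, negative) pairs."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def auc_by_group(labels, scores, groups):
    """Compute ROC AUC separately for each subgroup (e.g. cohort or ethnicity)."""
    buckets = defaultdict(lambda: ([], []))
    for y, s, g in zip(labels, scores, groups):
        buckets[g][0].append(y)
        buckets[g][1].append(s)
    results = {}
    for g, (ys, ss) in buckets.items():
        if 0 in ys and 1 in ys:  # AUC is undefined unless both classes occur
            results[g] = roc_auc(ys, ss)
    return results


labels = [1, 0, 1, 0, 1, 0, 1, 0]
scores = [0.9, 0.1, 0.8, 0.3, 0.6, 0.7, 0.4, 0.5]
groups = ["cohort_A"] * 4 + ["cohort_B"] * 4
print(auc_by_group(labels, scores, groups))  # → {'cohort_A': 1.0, 'cohort_B': 0.25}
```

A pooled AUC over all eight cases would hide the fact that the toy model works well on one cohort and fails on the other. This is exactly the kind of gap that a per-cohort breakdown makes visible.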

Here are my key findings from working through these studies (and some more that I didn't mention).

Key Findings

- Further progress is limited by the amount and quality of data: Most of the papers mentioned above used data from UK Biobank, even though they originate from many different regions of the world. This indicates a lack of high-quality data, which is the central factor limiting further progress and stronger evidence. Specifically, datasets should be labeled consistently, they should include detailed patient metadata, and image quality should not be compromised. In addition, data should be acquired from multiple centers and ethnicities. The UK Biobank data is an invaluable resource for research, and I'm looking forward to results from similar initiatives like the German National Cohort (NAKO Gesundheitsstudie).

- Machine learning methods are not the limiting factor for further progress: Most approaches are based on rather "standard" CNN architectures that are tweaked with domain knowledge. The performance difference between the best and second-best architectures on standard computer vision benchmarks (e.g. ImageNet) only comes into play when models are trained on really huge datasets (hundreds of thousands to millions of images). This is not yet the reality in most medical AI applications.

- Clinical applications of ML systems operating "outside of the eye" are mostly in cardiovascular risk quantification: It seems that the microcirculation of the fundus contains rich information on cardiovascular health. Even the ML system that aimed to classify Alzheimer's disease relied mostly on image features derived from the vascular system of the eye. Generally, there is very little work on fundus image phenotyping for neurodegenerative diseases, although this impression may be subject to publication bias.

- Domain shift and ethnic bias are (still) serious problems: Several papers have shown that domain shift and bias concerning ethnicities degrade the performance of ML models considerably. Most works trained their models on data from one region and validated them on a different population, which makes sense for quantifying bias. However, I could imagine that mixing the datasets (i.e. data from different ethnicities) in both training and validation can reduce bias. For future clinical applications, it must be ensured that ML models are only used in populations they have been optimized for.

- There is very little work on longitudinal and prognostic models so far: I had hoped to find more work based on longitudinal data in this field, but there are almost no datasets with follow-up. This is a pity because such data could enable earlier risk identification and diagnosis. To me, it seems very interesting to train ML systems that can detect changes very early, maybe even before they are visible to the human eye.
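The idea from the domain-shift finding, mixing populations across training and validation, can be sketched as a split that is stratified by group. This is a minimal sketch under stated assumptions. It assumes one record per patient, so that images of both eyes of the same person cannot leak across the split, and it uses a hypothetical `ethnicity` field. The function name `stratified_split` is also made up for illustration.

```python
import random
from collections import defaultdict


def stratified_split(records, key, val_fraction=0.2, seed=0):
    """Split patient-level records into train/validation sets so that each
    group (as returned by `key`) contributes roughly `val_fraction` of its
    records to validation. Groups with a single record go to training only."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[key(record)].append(record)
    train, val = [], []
    for group in sorted(by_group):
        items = by_group[group][:]
        rng.shuffle(items)
        n_val = max(1, round(len(items) * val_fraction)) if len(items) > 1 else 0
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val


# Hypothetical toy cohort with two unevenly sized groups.
patients = [{"patient_id": i, "ethnicity": "group_1" if i < 50 else "group_2"}
            for i in range(80)]
train, val = stratified_split(patients, key=lambda p: p["ethnicity"])
```

This complements, rather than replaces, the cross-population validation used in most papers. A hold-out population still measures domain shift, while a stratified split checks how a model trained on mixed data performs on each group. scikit-learn offers similar functionality through `train_test_split(..., stratify=...)` and, for patient-level grouping, `GroupShuffleSplit`.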

To conclude, there is early evidence suggesting that fundus images contain much more information, reaching well beyond the eye. The key to decoding it is to acquire larger, high-quality datasets and to further tighten collaborations between the machine learning and medical communities.